The Epistemic Breach: Truth and Reality in the Age of Generative AI

Episode Overview

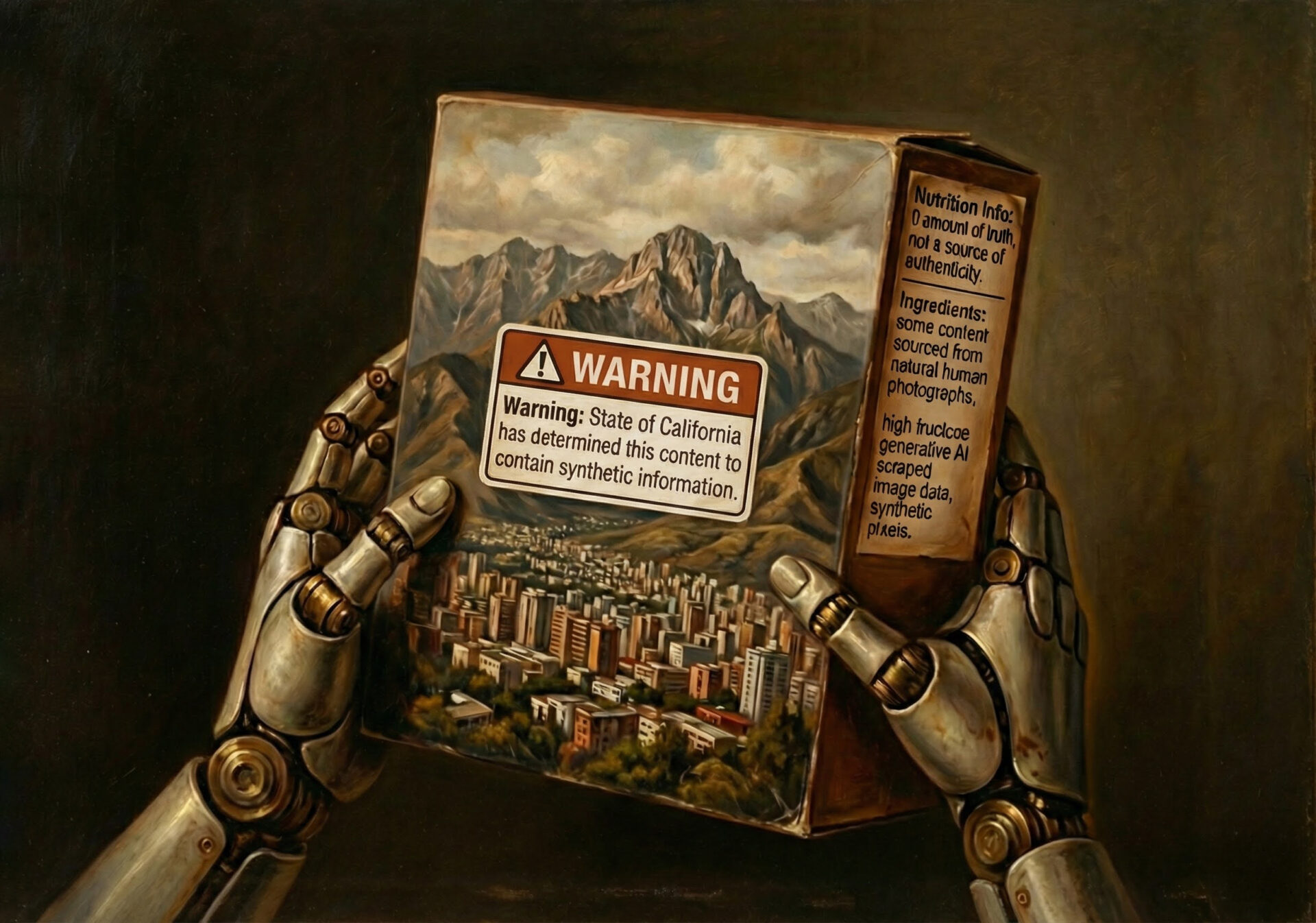

This episode explores the “epistemic breach”: a philosophical crisis in which generative AI threatens our collective ability to agree on objective truth. We trace the history of visual trust and examine how the “indexical contract” of physical film has dissolved into an era of “null information,” in which images no longer require any basis in physical reality.

Key Discussion Points

1. The Death of the “Indexical Contract”

For most of the 20th century, we operated under an unspoken agreement that a photograph was a direct physical trace of reality. This “indexical contract” was the bedrock of trust in photojournalism.

- The Digital Shift: The move from film to pixels began to pull these threads apart, shifting the medium from witnessing events to simulating possibilities.

- Ontological Invalidation: Generative AI represents a terrifying leap because it creates visuals that borrow the “codes” of photography—grain, light, and depth—but refer to nothing lived or witnessed.

2. Hyperreality and Algorithmic Fragmentation

We revisit Jean Baudrillard’s concept of “hyperreality,” where simulations become more real than the reality they signify.

- Decentralized Simulations: Unlike the centralized mass media of the past, AI allows for an infinite, fragmented distribution of personalized realities.

- The Filter Bubble: Algorithms tune our digital environments to deliver the content that causes the least cognitive friction. This reinforces existing biases and makes simulations feel “true” simply because they align with our expectations.

3. Ethical Benchmarks: Lange and Salgado

To understand the current crisis, we look to the “engagement” of legendary documentary photographers.

- Dorothea Lange: A “professional seer” who used the camera as an instrument for democracy and social change. She attempted to affirm the dignity of her subjects, though her work highlights the eternal tension between universal icons and individual identity.

- Sebastião Salgado: An economist-turned-photographer who sought a universal visual language for global crises. He addressed the “aesthetic paradox”—the risk of making suffering too beautiful—by turning his art into a direct engine for ecological action through reforestation projects.

4. The Structural Threats of AI

- Null Information: Flawless simulations of events that never happened. Because detection is slow and expensive while generation is cheap and autonomous, we face a “generation-detection asymmetry.”

- Semantic Death: When AI models are trained on synthetic data, they form a self-referential loop. This weakens the link between a sign (a word or image) and the physical reality it refers to, potentially leading to a breakdown in shared human communication.
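
The self-referential loop behind “semantic death” can be illustrated with a deliberately simple toy. This is a sketch under stated assumptions, not a model of any real AI system: the "model" here just memorises the previous generation's output and resamples from it, and the names `next_generation` and `diversity_over_generations` are hypothetical. Because each generation only ever sees what the previous one produced, the number of distinct "signs" in circulation can stay flat or shrink, but it can never grow.

```python
import random


def next_generation(corpus, rng):
    """'Train' on a corpus by memorising it, then 'generate' a new corpus
    of the same size by sampling from it with replacement."""
    return [rng.choice(corpus) for _ in corpus]


def diversity_over_generations(vocab_size=50, generations=30, seed=0):
    """Return the count of distinct signs after each generation.

    Generation 0 stands in for human-made data: every sign appears once.
    Every later generation is built only from the one before it.
    """
    rng = random.Random(seed)
    corpus = list(range(vocab_size))
    history = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, rng)
        history.append(len(set(corpus)))
    return history


history = diversity_over_generations()
print(history)  # counts of distinct signs, generation by generation
```

A sign that drops out of one generation has no route back in, which is the toy version of the weakening link between sign and reality described above. Real training pipelines are far more complex, so this should be read as an intuition pump rather than a prediction.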

A Roadmap for Resilience

To combat epistemic collapse, we must re-establish “epistemic friction”—speed bumps for the brain that force us to slow down and verify.

- Mandatory Provenance: Legally mandated digital paper trails from the camera to the screen.

- Universal Labeling: Immutable, cryptographically secured markers for all synthetic content.

- Media Literacy 2.0: Moving beyond simple “fake news” detection toward understanding the structural mechanisms of hyperreality and algorithmic bias.
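
To make the first two proposals more concrete, here is a minimal sketch of what a “digital paper trail” with a tamper-evident synthetic label might look like. It is an illustration only and not a description of any existing standard: the record fields, the function names `add_step` and `verify`, and the shared `SECRET_KEY` are all assumptions. In particular, an HMAC with a shared key stands in for the public-key signatures a real provenance scheme would more plausibly use.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. A real system would likely use per-device
# public-key signatures rather than one shared secret.
SECRET_KEY = b"demo-key-not-for-production"


def _sign(payload):
    """Sign a record's fields with HMAC-SHA256 over a canonical JSON form."""
    message = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def add_step(chain, actor, action, content, synthetic=False):
    """Return a new chain with one more signed record appended.

    Each record stores a hash of the content at that step, a synthetic
    flag, and the previous record's signature, so records are linked.
    """
    payload = {
        "actor": actor,
        "action": action,
        "content_hash": hashlib.sha256(content).hexdigest(),
        "synthetic": synthetic,
        "prev_signature": chain[-1]["signature"] if chain else "",
    }
    return chain + [{**payload, "signature": _sign(payload)}]


def verify(chain, content):
    """Check every signature and link, then check that the final record
    matches the content actually being displayed."""
    if not chain:
        return False
    prev_signature = ""
    for record in chain:
        payload = {k: v for k, v in record.items() if k != "signature"}
        if payload["prev_signature"] != prev_signature:
            return False
        if not hmac.compare_digest(_sign(payload), record["signature"]):
            return False
        prev_signature = record["signature"]
    return chain[-1]["content_hash"] == hashlib.sha256(content).hexdigest()


raw = b"raw sensor bytes"
edited = b"cropped bytes"
trail = add_step([], "camera", "capture", raw)
trail = add_step(trail, "photo desk", "crop", edited)

print(verify(trail, edited))  # True: trail is intact and matches the image
print(verify(trail, raw))     # False: the image shown is not the final step
```

The design choice worth noticing is that the synthetic flag sits inside the signed payload, so flipping it after the fact invalidates the signature. That is one way to read “immutable” in the labeling proposal; it does nothing, of course, for content that never enters such a trail in the first place.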

Notable Quotes

“We’re moving beyond the problem of misinformation… We are entering the world of null information, a flawless simulation of an event that has no anchor in reality whatsoever.”

“The AI is our cognitive copilot… if that copilot is navigating a world subject to semantic decay, the human cognitive process itself is vulnerable to contaminated cognition.”